The nCov-Group Data Repository

Targeting SARS-CoV-2 with AI- and HPC-enabled Lead Generation

This repository provides access to data, models, and code produced by the nCoV Group in support of research aimed at generating leads for potential SARS-CoV-2 drugs. The data include representations and computed descriptors for around 4.2 billion small molecules: some 60 TB of data in all, although many useful subsets are much smaller.

These data will be updated regularly as the collaboration produces new results. Shared data are located on the nCov-Group Data Repository endpoint at this location, from where they can be accessed via Globus. To access the data, users should: 1) log in with existing credentials (link) and 2) access the Globus endpoint.

Papers

Targeting SARS-CoV-2 with AI- and HPC-enabled Lead Generation: A First Data Release

IMPECCABLE: integrated modeling pipelinE for COVID cure by assessing better LEads

High-Throughput Virtual Screening and Validation of a SARS-CoV-2 Main Protease Noncovalent Inhibitor

Table of contents

- Papers

- Data Processing Pipeline

- Dataset Catalog

- Dataset Downloads

- Methodology and Data Processing Pipeline

- Data Extraction from Literature

- Acknowledgements

- Disclaimer

Data Processing Pipeline

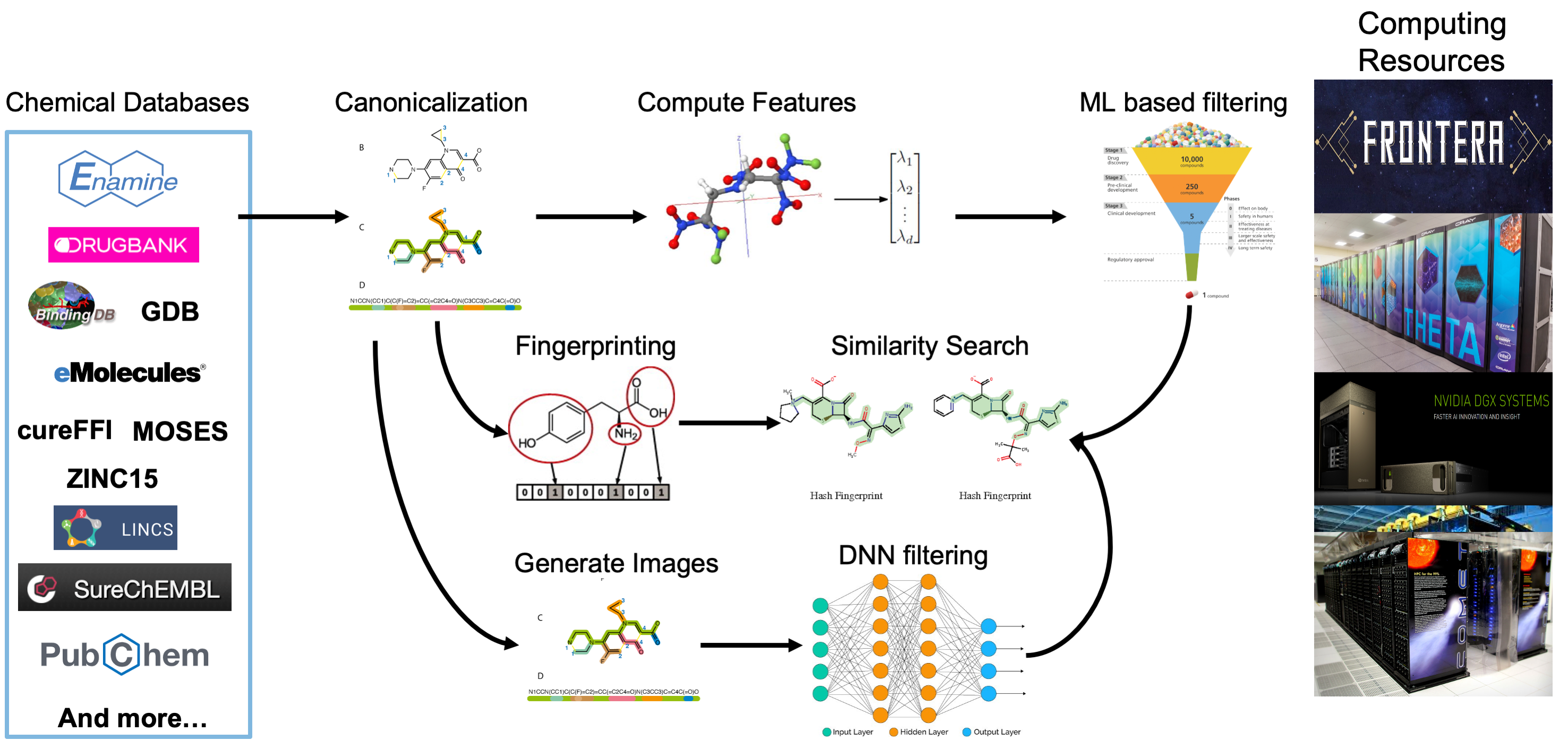

The data processing pipeline is used to compute different types of features and representations of billions of small molecules. The pipeline is first used to convert the SMILES representation for each molecule to a canonical SMILES to allow for de-duplication and consistency across data sources. Next, for each molecule, three different types of features are computed: 1) molecular fingerprints that encode the structure of molecules; 2) 2D and 3D molecular descriptors; and 3) 2D images of the molecular structure. These features are being used as input to various machine learning and deep learning models that will be used to predict important characteristics of candidate molecules including docking scores, toxicity, and more.

Figure 1: The computational pipeline that is used to enrich the data collected from includeddatasets. After collection, each molecule in each dataset has canonical SMILES, 2D and 3D molecular features, fingerprints, and images computed. These enrichments simplifymolecule disambiguation, ML-guided compound screening, similarity searching, and neuralnetwork training respectively.

Figure 1: The computational pipeline that is used to enrich the data collected from includeddatasets. After collection, each molecule in each dataset has canonical SMILES, 2D and 3D molecular features, fingerprints, and images computed. These enrichments simplifymolecule disambiguation, ML-guided compound screening, similarity searching, and neuralnetwork training respectively.

Dataset Catalog

We have obtained molecule definitions from the following source datasets. For each, we provide a link to the original source, the number of molecules included from the dataset, and the percentage of those molecules that are not found in any other listed dataset.

Notes:

- The key for each dataset may be used in filenames in place of the full name in downloads elsewhere.

- The numbers above may be less than what can be found at the source, due to conversion failures and/or version differences.

- These numbers do not account for de-duplication, within or between datasets.

Dataset Downloads

Follow the links below to access canonical SMILES, molecular fingerprints, descriptors, and images (png format) for each dataset. The links in the final row provide access to all SMILES, fingerprints, descriptors, and images, respectively.

Methodology and Data Processing Pipeline

Canonical Molecular Structures

We use Open Babel v3.0 to convert the simplified molecular-input line-entry system (SMILES)specifications of chemical species obtained from various sources into a consistent canonical smilesrepresentation. We organize the resulting molecule specifications in one directory per source dataset, each containing one CSV file with columns [SOURCE-KEY, IDENTIFIER, SMILES],where SOURCE-KEY identifies the source dataset; IDENTIFIER is an identifier either obtainedfrom the source dataset or, if none such is available, defined internally; and SMILES is a canon-ical SMILES as produced by Open Babel. Identifiers are unique within a dataset, but may notbe unique across datasets. Thus, the combination of (SOURCE-KEY, IDENTIFIER) is needed to identify molecules uniquely. We obtain the canonical SMILES by using the following Open Babel command

obabel {inputfilename} -O{outputfilename} -ocan -e

Molecular Fingerprints

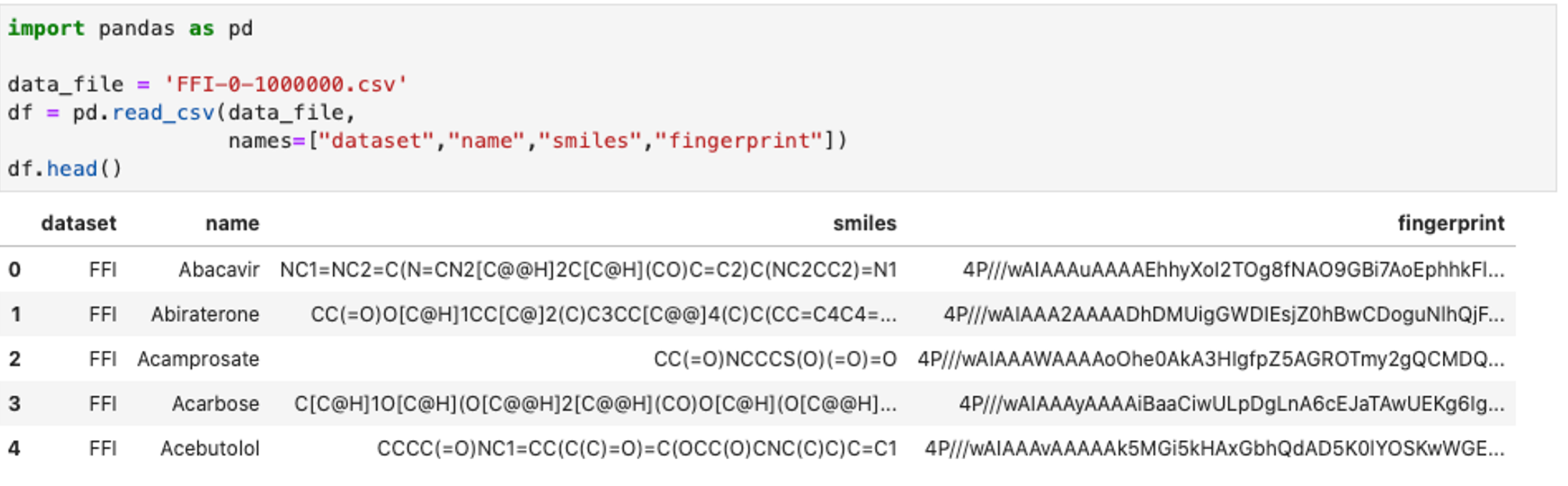

We use RDKit (version 2019.09.3) to compute a 2048-bit fingerprint for each molecule. Weorganize these fingerprints in CSV files with each row with columns [SOURCE-KEY, IDENTI-FIER, SMILES, FINGERPRINT], where SOURCE-KEY, IDENTIFIER, and SMILES are asdefined in Table 2, and FINGERPRINT is a Base64-encoded representation of the fingerprint. In Figure 2, we show an example of how to load the fingerprint data from a batch file within individual dataset using Python 3. Further examples of how to use fingerprints are available inthe accompanying GitHub repository.

Figure 2: A simple Python code example showing how to load data from a fingerprint file.(This and other examples are accessible in the accompanying GitHub repository.

Figure 2: A simple Python code example showing how to load data from a fingerprint file.(This and other examples are accessible in the accompanying GitHub repository.

Molecular Descriptors

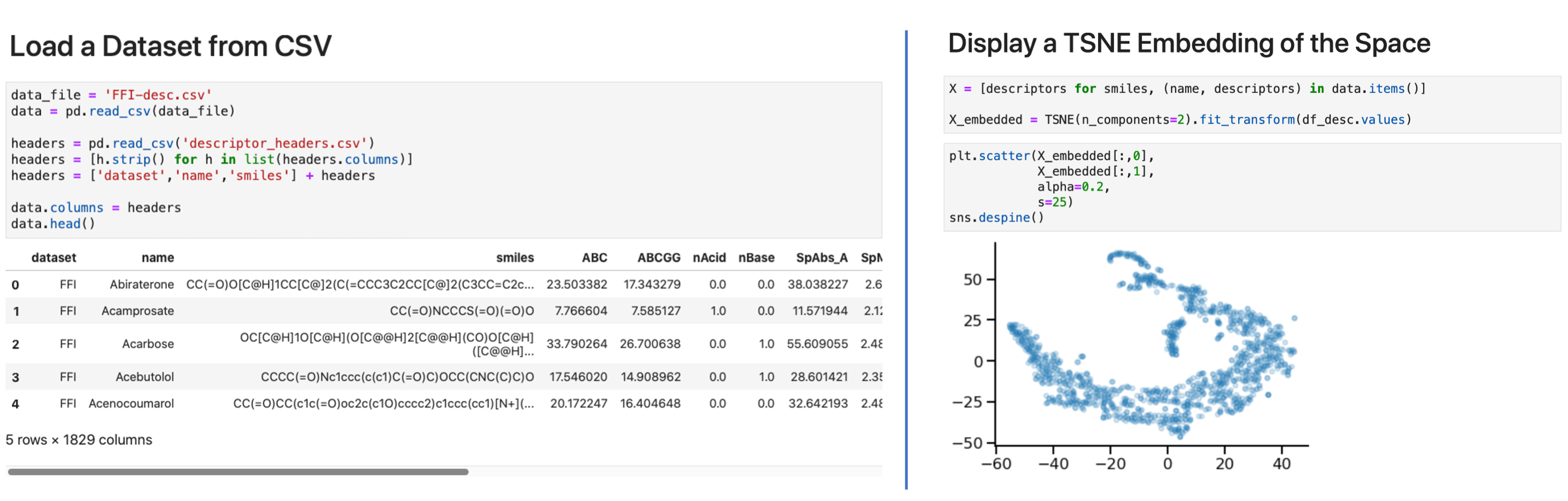

We generate molecular descriptors using Mordred(version 1.2.0). The collected descriptors(∼1800 for each molecule) include descriptors for both 2D and 3D molecular features. Weorganize these descriptors in one directory per source dataset, each containing one or moreCSV files. Each row in the CSV file has columns [SOURCE-KEY, IDENTIFIER, SMILES, DESCRIPTOR_1… DESCRIPTOR_N]. In Figure 3, we show how to load the data for anindividual dataset (e.g., FFI) using Python 3 and explore its shape (Figure 3-left), and create a TSNE embedding to explore the molecular descriptor space (Figure 3-right).

Figure 3: Molecular descriptor examples: (left) load descriptor data and (right) create asimple TSNE projection of the FFI dataset.

Figure 3: Molecular descriptor examples: (left) load descriptor data and (right) create asimple TSNE projection of the FFI dataset.

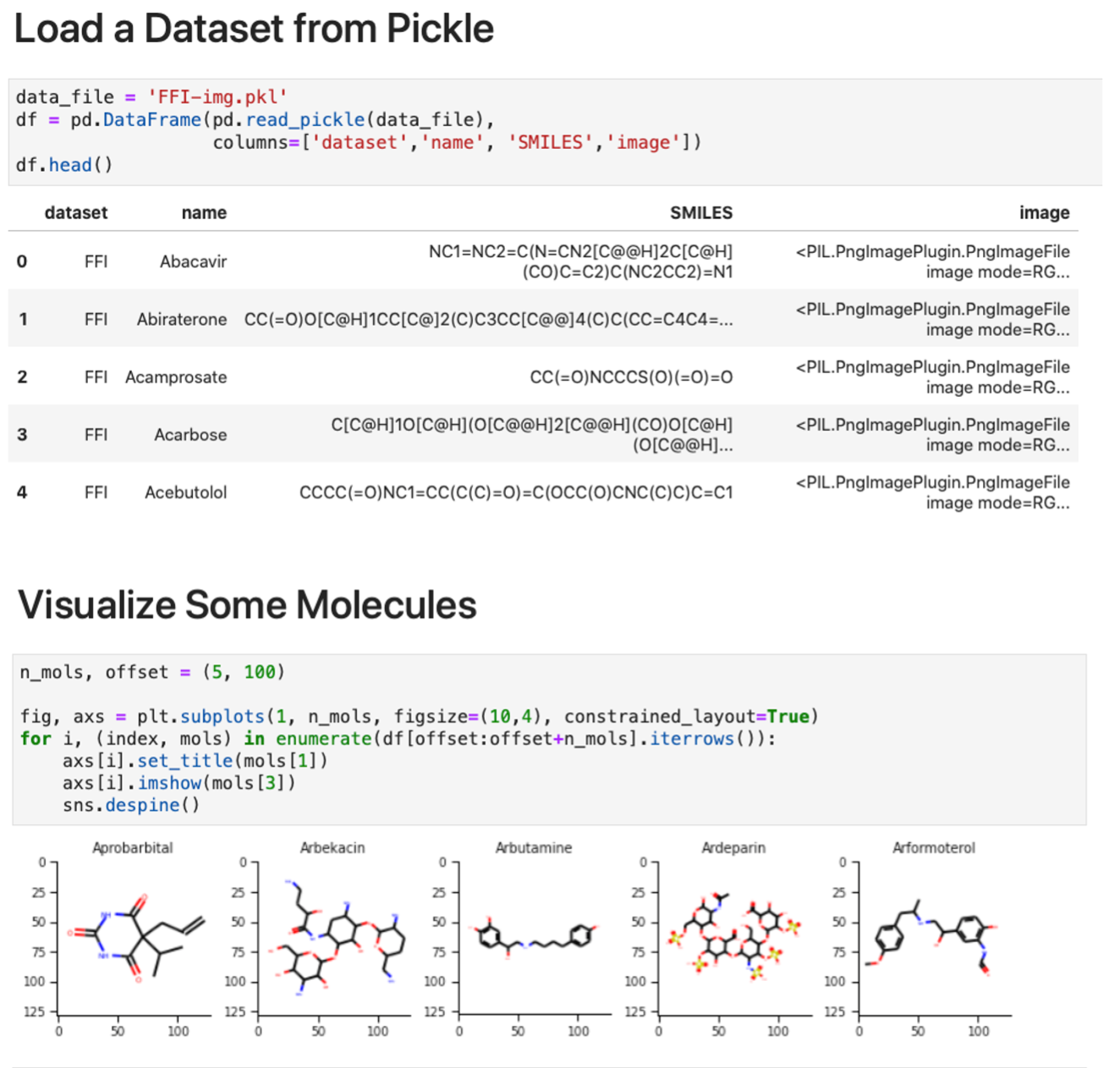

Molecular Images

Images for each molecule were generated using a custom script [44] to read the canonical SMILES structure with RDKit, kekulize the structure, handle conformers, draw the molecule with rd-kit.Chem.Draw, and save the file as a PNG-format image with size 128×128 pixels. For each dataset, individual pickle files are saved containing batches of 10 000 images for ease of use, with entries in the format (SOURCE, IDENTIFIER, SMILES, image in PIL format). In Figure 4, weshow an example of loading and display image data from a batch of files from the FFI dataset.

Figure 4: Molecular image examples. The examples show how to (top) load the data and (bottom) display a subset of the images using matplotlib.

Data Access

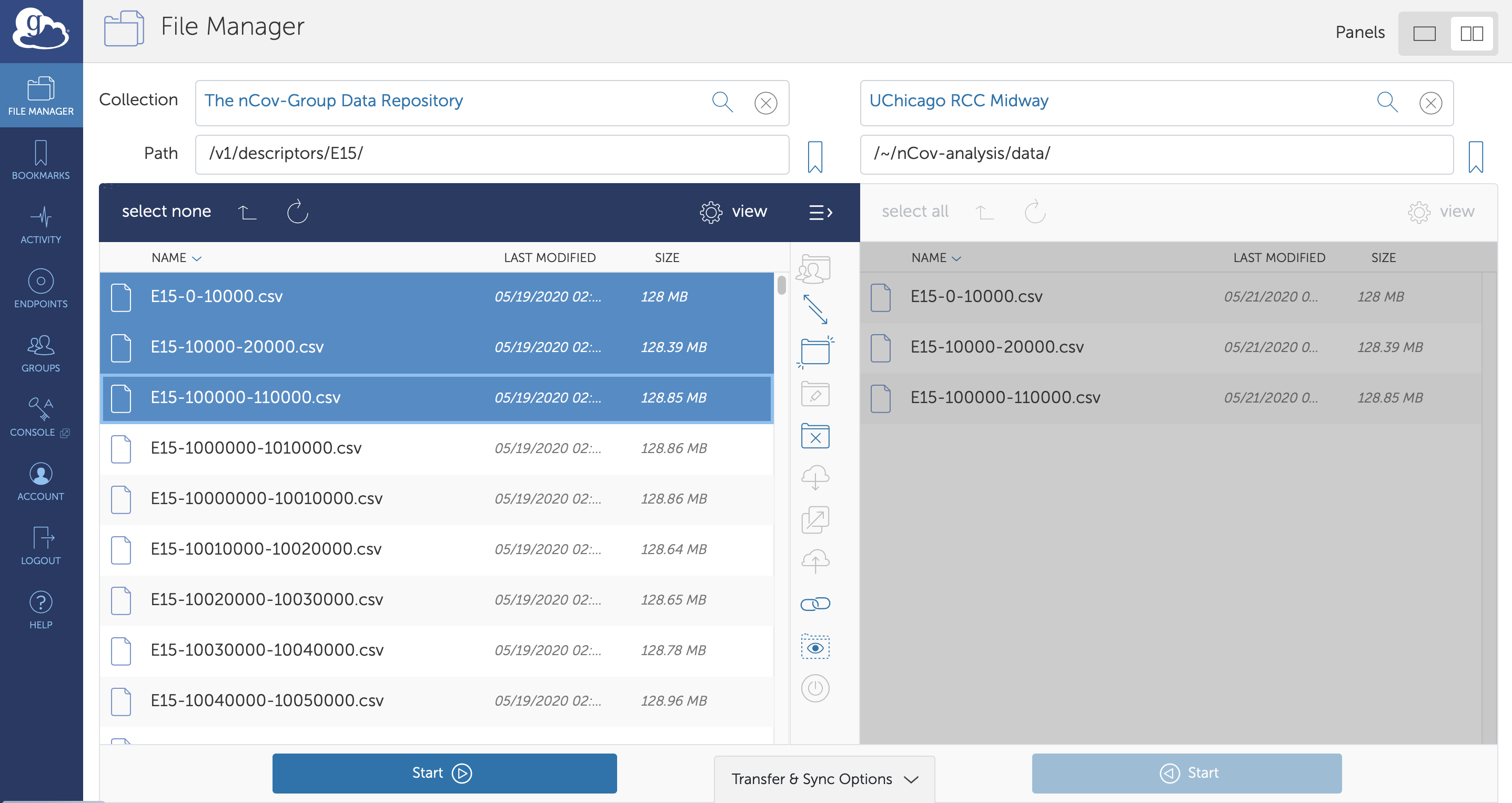

Providing access to such a large quantity of heterogeneous data (currently ~60 TB) is challenging. We use Globus to handle authentication and authorization, and to enable high-speed,reliable access to the data. Access to this data is available to anyone following authentication via institutional credentials, an ORCID profile, a Google account, or many other common identities. Users can access the data through a web user interface shown in Fig. 5, facilitating easy browsing, direct download via HTTPS of smaller files, and high-speed, reliable transfer of larger data files to their laptop or a computing cluster via Globus Connect Personal or an instance of Globus Connect Server. There are more than 20 000 active Globus endpoints distributed around the world. Users may also access the data with a full-featured Python SDK. More details on Globus can be found at https://www.globus.org.

Figure 5: Data access with Globus. All data are stored on Globus endpoints, allowing usersto access, move, and share the data through a web interface (pictured above), a REST API, or with a Python client. The user here has just transferred the first three files of descriptors associated with the E15 dataset to an endpoint at UChicago.

Figure 5: Data access with Globus. All data are stored on Globus endpoints, allowing usersto access, move, and share the data through a web interface (pictured above), a REST API, or with a Python client. The user here has just transferred the first three files of descriptors associated with the E15 dataset to an endpoint at UChicago.

Code

Code to help users understand the methodology and use the data are included in the Globus Labs Covid Analyses GitHub repository.

Data Extraction from Literature

The data extraction team is working to extract a set of known antiviral molecules that have been previously tested against coronaviruses. This set of molecules will inform future efforts to screen candidates using simulated docking and more. There are two efforts current underway, a manual extraction effort, and an effort to build a named-entity recognition model that aims to automatically from a much larger literature corpus.

Named-Entity Recognition Models for Identification of Antivirals

Researchers worldwide are seeking to repurpose existing drugs or discover new drugs to counter the disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). A promising source of candidates for such studies is molecules that have been reported in the scientific literature to be drug-like in the context of coronavirus research. We report here on a project that leverages both human and artificial intelligence to detect references to drug-like molecules in free text. We engage non-expert humans to create a corpus of labeled text, use this labeled corpus to train a named entity recognition model, and employ the trained model to extract 10912 drug-like molecules from the COVID-19 Open Research Dataset Challenge (CORD-19) corpus of 198875 papers. Performance analyses show that our automated extraction model can achieve performance on par with that of non-expert humans.

Manual Extraction of Antivirals from Literature

Babuji, Y., Blaiszik, B., Chard, K., Chard, R., Foster, I., Gordon, I., Hong, Z., Karbarz, K., Li, Z., Novak, L., Sarvey, S., Schwarting, M., Smagacz, J., Ward, L., & Orozco White, M. (2020). Lit - A Collection of Literature Extracted Small Molecules to Speed Identification of COVID-19 Therapeutics [Dataset]. Materials Data Facility. https://doi.org/10.26311/LIT

Acknowledgements

Research was supported by the DOE Office of Science through the National Virtual Biotechnology Laboratory, a consortium of DOE national laboratories focused on response to COVID-19, with funding provided by the Coronavirus CARES Act.

Data storage and computational support for this research project has been generously supported by the following resources. The data generated have been prepared as part of the nCov-Group Collaboration, a group of over 200 researchers working to use computational techniques to address various challenges associated with COVID-19.

Petrel Data Service at the Argonne Leadership Computing Facility (ALCF)

This research used resources of the Argonne Leadership Computing Facility, a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

Theta at the Argonne Leadership Computing Facility (ALCF)

This research used resources of the Argonne Leadership Computing Facility, a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

Frontera at the Texas Advanced Computing Center (TACC)

Comet at the San Diego Supercomputing Center (SDSC)

Data and Computing Infrastructure

Many aspects of the data and computing infrastructure have been leveraged from other projects including but not limited to:

Data processing and computation:

- ExaLearn and the Exascale Computing Project

- Parsl: parallel scripting libarary (NSF 1550588)

- funcX: distributed function as a service platform (NSF 2004894)

Data Tools, Services, and Expertise:

- Globus: data services for science (authentication, transfer, users, and groups)

- CHiMaD: Materials Data Facility and Polymer Property Predictor Database (NIST 70NANB19H005 and NIST 70NANB14H012)

Disclaimer

For All Information

Unless otherwise indicated, this information has been authored by an employee or employees of the UChicago Argonne, LLC., operator of the Argonne National laboratory with the U.S. Department of Energy. The U.S. Government has rights to use, reproduce, and distribute this information. The public may copy and use this information without charge, provided that this Notice and any statement of authorship are reproduced on all copies.

While every effort has been made to produce valid data, by using this data, User acknowledges that neither the Government nor UChicago Argonne LLC. makes any warranty, express or implied, of either the accuracy or completeness of this information or assumes any liability or responsibility for the use of this information. Additionally, this information is provided solely for research purposes and is not provided for purposes of offering medical advice. Accordingly, the U.S. Government and UChicago Argonne LLC. are not to be liable to any user for any loss or damage, whether in contract, tort (including negligence), breach of statutory duty, or otherwise, even if foreseeable, arising under or in connection with use of or reliance on the content displayed on this site.